Modern architectural approaches promote the development of independent services.

However, even though services are designed to be independent, they cannot just operate in their little bubbles. They need to talk to each other to get things done.

Getting the communication right between services can be challenging.

Developers can leverage multiple patterns, but each pattern has its own advantages and disadvantages, and the right choice often depends heavily on the application's context.

In this article, we will look at various service communication patterns and discuss their trade-offs so that you can make the best choice while building your projects.

Challenges with Service Communication

When two services communicate, the interaction takes place between separate processes, often across a network.

This inter-process communication fundamentally differs from method calls within a single process, leading to multiple challenges such as:

1. Performance

In-process method calls benefit from compiler and runtime optimizations such as inlining, which can eliminate the overhead of a function call entirely.

However, inter-process communication involves sending packets over the network, which introduces much higher latency than an in-process call. It also requires serializing and deserializing data, which adds further overhead.
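Here is a minimal Python sketch of the serialization step. It assumes JSON as the wire format (real services may use Protocol Buffers, Avro, or something else). The function `simulated_remote_call` is a hypothetical stand-in that performs only the encode and decode steps, not an actual network hop.

```python
import json

order = {"id": 42, "items": ["book", "pen"], "total": 17.5}

def in_process_call(payload):
    # Same process: the callee receives a reference to the caller's object.
    return payload

def simulated_remote_call(payload):
    # Across processes, the payload must be serialized to bytes for the network...
    wire_bytes = json.dumps(payload).encode("utf-8")
    # ...and deserialized by the receiver, which builds a brand-new object.
    return json.loads(wire_bytes.decode("utf-8"))

same = in_process_call(order)
copy = simulated_remote_call(order)

print(same is order)  # True: no copy was made
print(copy is order)  # False: the receiver works on a copy
print(copy == order)  # True: the data survived the round trip
```

Every remote call pays to build the bytes and to rebuild the object, on top of the network latency itself.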

2. Interface Modification

Changing a method signature within an in-process call is straightforward because the interface and code are packaged together. In fact, most modern IDEs provide refactoring features that make such changes across the codebase easy.

However, in service communication over the network, interface modification can turn into a rather complex activity. Depending on the type of change, you may need to perform lockstep deployment with consumers or support multiple interface versions simultaneously.

3. Error Handling

Errors are relatively easy to handle within a process boundary by propagating them up the call stack.

With services, error handling becomes more complex due to various scenarios like network timeouts, unavailable services, and resource constraints.

Also, different services might be built using different programming languages and frameworks, so you need a language-neutral convention, such as standard HTTP status codes, to communicate errors across them.
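As an illustration of such a convention, the sketch below maps a service's internal exceptions to standard HTTP status codes and a JSON error body that a client written in any language can parse. The exception classes, the mapping, and the body's field names are hypothetical choices, not a standard.

```python
import json

# Hypothetical domain exceptions raised inside the service.
class NotFoundError(Exception):
    pass

class ValidationError(Exception):
    pass

class DependencyTimeoutError(Exception):
    pass

# Map internal exception types to standard HTTP status codes.
STATUS_BY_EXCEPTION = {
    NotFoundError: 404,           # Not Found
    ValidationError: 400,         # Bad Request
    DependencyTimeoutError: 504,  # Gateway Timeout
}

def to_http_error(exc):
    """Translate an exception into a (status, JSON body) pair."""
    status = 500  # default for unexpected errors: Internal Server Error
    for exc_type, code in STATUS_BY_EXCEPTION.items():
        if isinstance(exc, exc_type):
            status = code
            break
    body = json.dumps({"error": type(exc).__name__, "message": str(exc)})
    return status, body

status, body = to_http_error(NotFoundError("order 42 does not exist"))
print(status)  # 404
print(body)
```

Unknown exceptions fall back to 500, so a caller always gets a status code it can act on even when the failure was not anticipated.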

4. Versioning and Compatibility

Maintaining compatibility between different service versions can also be challenging.

Changes to message formats, protocols, or APIs need to be carefully managed to avoid breaking existing integrations.
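One common way to manage such changes is a tolerant consumer that accepts both the old and the new message format. In the sketch below, the message shapes, the `version` field, and the default currency are all hypothetical; the technique is to branch on an explicit version, ignore fields the consumer does not need, and give new optional fields a default.

```python
# Hypothetical "order created" messages: v1 stored the customer name as one
# string; v2 splits it into two fields and adds an optional currency.
def parse_order_event(message):
    version = message.get("version", 1)  # messages without a version are treated as v1
    if version == 1:
        first, _, last = message["customer_name"].partition(" ")
    elif version == 2:
        first, last = message["first_name"], message["last_name"]
    else:
        raise ValueError(f"unsupported message version: {version}")
    return {
        "order_id": message["order_id"],
        "first_name": first,
        "last_name": last,
        # New optional fields get a default so older producers keep working.
        "currency": message.get("currency", "USD"),
    }

v1 = {"order_id": 7, "customer_name": "Ada Lovelace"}
v2 = {"version": 2, "order_id": 8, "first_name": "Alan",
      "last_name": "Turing", "currency": "EUR"}

print(parse_order_event(v1))
print(parse_order_event(v2))
```

Because the consumer understands both formats, producers can migrate to v2 on their own schedule instead of in lockstep.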

5. Developer Inertia

Developers can fall into the trap of using a one-size-fits-all approach to choosing how these services should communicate.

They might pick a communication method without considering whether it’s the best fit for the problem they want to solve.

Key Communication Patterns

Let us now look at the key service-to-service communication patterns you can use while designing your application.

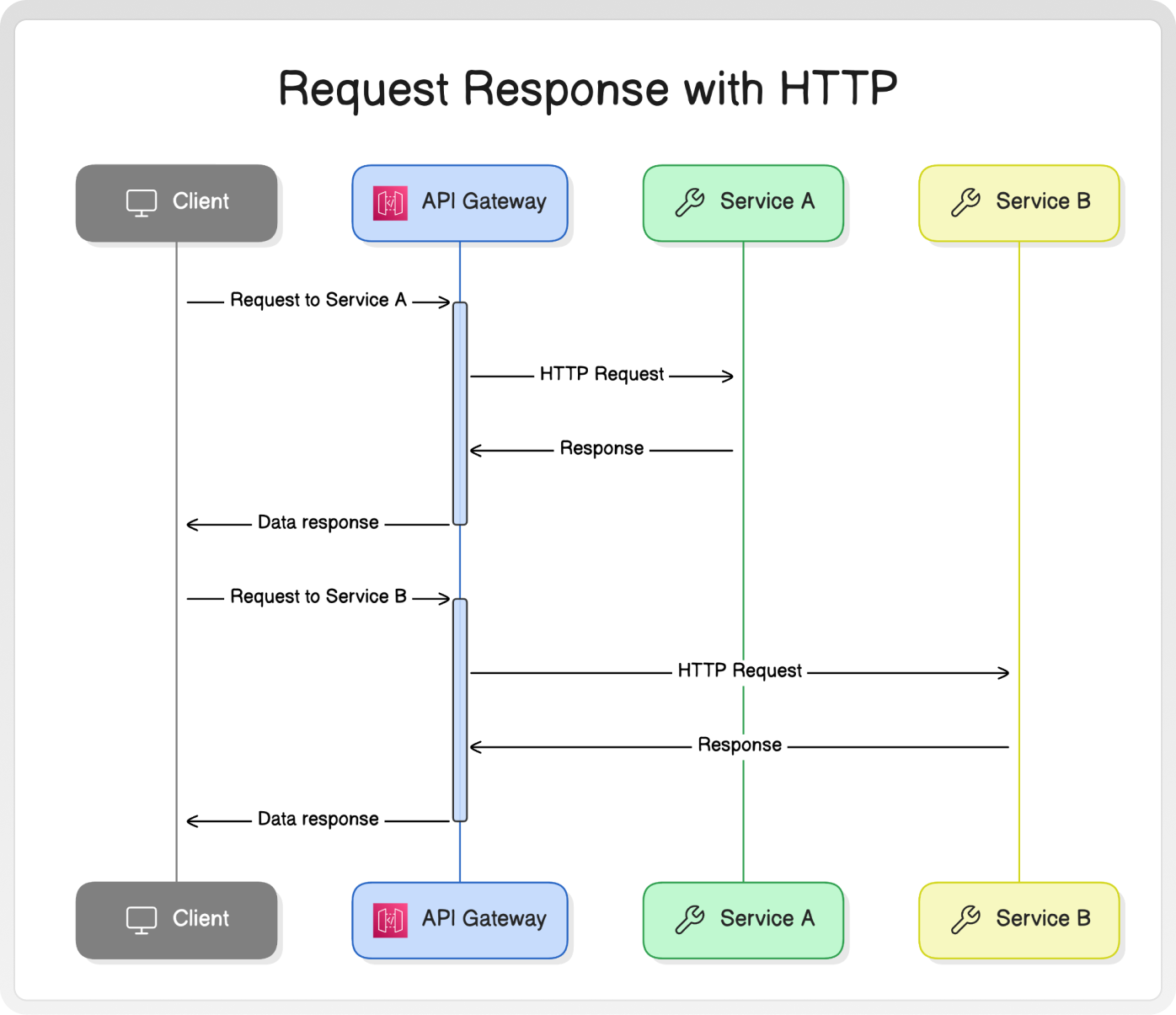

Request Response over HTTP

In this pattern, one service sends a request to another service and remains blocked until it receives a response or encounters an error.

REST, which leverages the HTTP protocol and its methods (GET, POST, PUT, DELETE), is the most widely used architectural style for implementing this type of communication.

The diagram below shows one typical setup, in which this type of communication is routed through an API Gateway.

While the synchronous request-response pattern is robust and commonly used, there are trade-offs associated with it.

When to Use Synchronous Request Response?

The synchronous request-response pattern is well-suited for situations where you need immediate responses and are fine with the blocking nature of the communication.

It's often used when clients, such as user interfaces, require real-time data from the server. The simplicity and familiarity of this approach make it a popular choice for many developers.
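Here is a minimal sketch of a blocking request-response call using only Python's standard library. A tiny local HTTP server stands in for the downstream service; the `InventoryHandler` class, the `/stock/<sku>` path, and the `get_stock` helper are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class InventoryHandler(BaseHTTPRequestHandler):
    """Hypothetical downstream service that answers GET /stock/<sku>."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        body = json.dumps({"sku": sku, "in_stock": 3}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo's output quiet

# Port 0 lets the OS pick a free port for the demo server.
server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
port = server.server_address[1]

def get_stock(sku, timeout_seconds=2.0):
    url = f"http://127.0.0.1:{port}/stock/{sku}"
    # urlopen blocks this thread until the response arrives, an error occurs,
    # or the timeout expires; the caller can do nothing else meanwhile.
    with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
        return json.loads(response.read().decode("utf-8"))

result = get_stock("ABC-123")
print(result)  # {'sku': 'ABC-123', 'in_stock': 3}

server.shutdown()
server.server_close()
```

The explicit timeout matters: without one, a blocked caller can wait indefinitely on a slow or unresponsive service.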

Drawbacks

The drawbacks of this approach are as follows:

- Cascading Failures: If you have a chain of services relying on synchronous request-response calls, a single service facing issues can cause the entire operation to fail. This is because each service in the chain is dependent on the successful response of its downstream service. If one service in the chain experiences problems or becomes unresponsive, it can trigger a cascading failure throughout the system, leading to overall degraded performance or even complete system unavailability.

- Resource Wastage: When services are blocked waiting for responses, they consume resources such as threads or database connections. If multiple services are stuck in this waiting state simultaneously, it can lead to resource contention and inefficient utilization. This resource wastage can impact the scalability and performance of your system.

- Tighter Coupling: Synchronous request-response introduces temporal coupling between the communicating services: both must be available at the same time. This coupling exists between specific instances of those services. The response is sent back over the same network connection that the caller opened, so if the calling instance dies before receiving the response, the response is lost forever.
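One widely used mitigation for cascading failures and blocked resources, also named in the best practices later in this article, is a circuit breaker. The class below is a minimal single-threaded sketch, not a production library; the thresholds and the injectable `clock` parameter are assumptions made to keep it small and testable.

```python
import time

class CircuitBreaker:
    """After `failure_threshold` consecutive failures the circuit opens and
    calls are rejected immediately until `reset_timeout` seconds pass; then
    one trial call is allowed through (the half-open state)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Fail fast instead of tying up a thread on a struggling service.
                raise RuntimeError("circuit open: downstream call skipped")
            # Reset timeout elapsed: fall through and make a trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result

def flaky_service():
    raise ConnectionError("downstream unavailable")

now = [0.0]  # fake clock so the demo does not need to sleep
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])
for _ in range(2):
    try:
        breaker.call(flaky_service)
    except ConnectionError:
        pass

# The third call is rejected without touching the downstream service.
try:
    breaker.call(flaky_service)
except RuntimeError as exc:
    print(exc)  # circuit open: downstream call skipped
```

Failing fast while the circuit is open frees the caller's threads and connections and gives the struggling service time to recover. A production implementation would also need thread safety and would typically limit how many trial calls run in the half-open state.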

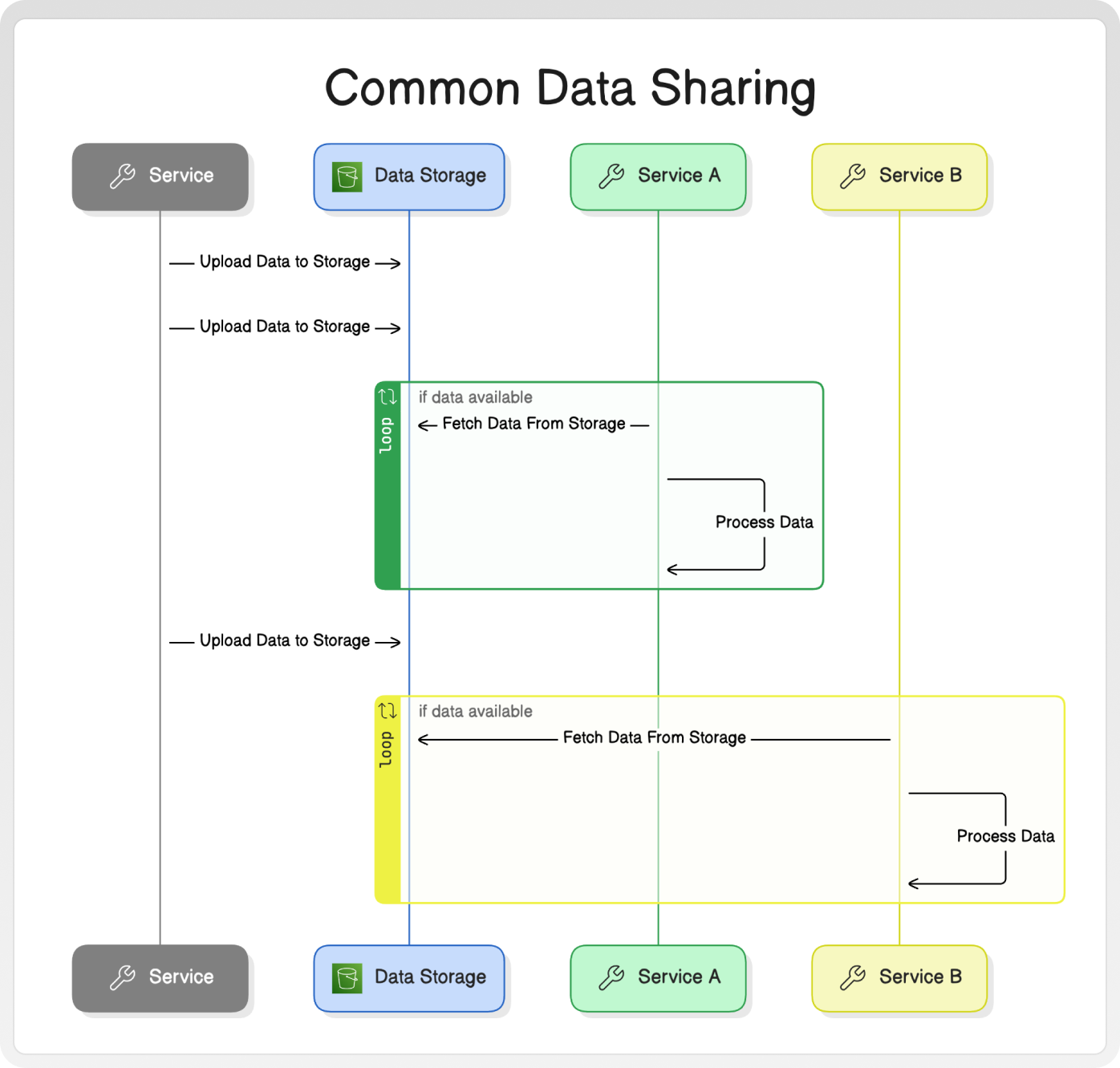

Common Data Sharing

The data-sharing pattern is a communication approach that often goes unnoticed, not because it's rarely used, but because developers may not immediately recognize it as a distinct communication pattern.

In this pattern, one service writes data to a designated location such as object storage, and another service reads and processes that data.

When to Use the Data-Sharing Pattern

The data-sharing pattern is quite helpful when you need old systems to work with newer, smaller services.

For instance, if you have an older system that only works by sending or receiving files, this pattern allows it to connect smoothly. One service can create files and put them in a shared place, like object storage, and the legacy system can pick up those files to process them.

This approach is simple and flexible because it can be done with any programming language or tool that can handle files. It's also good for handling large amounts of data when you need to share big files or process millions of records.

Drawbacks

While the data-sharing pattern offers simplicity and compatibility, it comes with a significant limitation: high latency.

In this pattern, the downstream service typically finds out about new data through a polling mechanism or a periodically scheduled job. As a result, there is a delay between the time the data is written and the time it is processed by the consuming service.

If you have scenarios that demand low-latency communication between services, the data-sharing pattern may not be a good choice.
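The sketch below shows the pattern end to end, assuming a local directory as a stand-in for shared storage such as an object storage bucket. The file names and the `export_records` and `poll_once` helpers are hypothetical. The producer writes to a temporary name and renames the file when done, so the consumer never reads a partial file; `os.replace` guarantees an atomic rename on POSIX filesystems, and this trick is specific to the local-directory stand-in.

```python
import json
import os
import tempfile

# A local directory stands in for shared storage (e.g. an object storage bucket).
shared_dir = tempfile.mkdtemp()

def export_records(records, name):
    """Producer: write under a temporary name, then rename into place."""
    tmp_path = os.path.join(shared_dir, name + ".tmp")
    with open(tmp_path, "w", encoding="utf-8") as f:
        json.dump(records, f)
    os.replace(tmp_path, os.path.join(shared_dir, name))

def poll_once(seen):
    """Consumer: one polling pass that processes files it has not seen yet."""
    processed = []
    for name in sorted(os.listdir(shared_dir)):
        if name.endswith(".tmp") or name in seen:
            continue  # skip in-progress and already-processed files
        with open(os.path.join(shared_dir, name), encoding="utf-8") as f:
            processed.extend(json.load(f))
        seen.add(name)
    return processed

seen = set()
export_records([{"id": 1}, {"id": 2}], "orders-0001.json")
first = poll_once(seen)
second = poll_once(seen)
print(first)   # [{'id': 1}, {'id': 2}]
print(second)  # [] (nothing new since the last pass)
```

In a real deployment, a scheduler would call `poll_once` at a fixed interval, so a file written just after a pass waits up to a full interval before it is processed.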

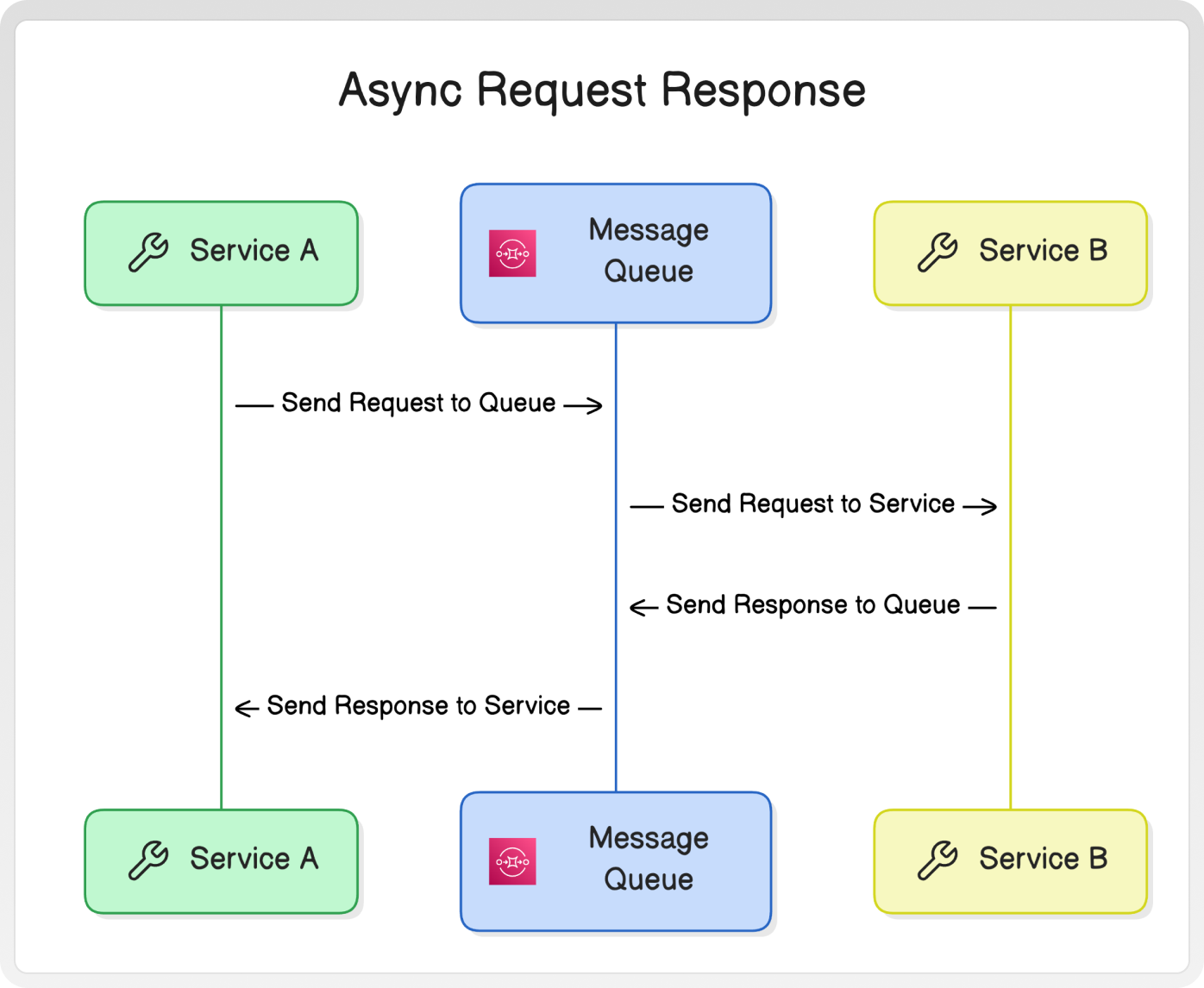

Asynchronous Request Response

The request-response pattern can also be implemented using an asynchronous, non-blocking approach, offering an alternative to the traditional synchronous communication style.

In an asynchronous non-blocking request-response implementation, the receiving service needs to explicitly know the destination for sending the response. This is where message queues come into play. Message queues are ideal for facilitating this type of communication pattern, as they provide a reliable and scalable way to exchange messages between two services.
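The following sketch simulates the pattern in one process, assuming Python's `queue.Queue` as a stand-in for a broker's queues. The queue names, the `reply_to` and `correlation_id` fields, and the pricing logic are hypothetical. The point is that each request names its reply destination explicitly and the caller does not block while the request is processed.

```python
import queue
import threading
import uuid

# In-memory queues stand in for a message broker; the names are hypothetical.
queues = {
    "pricing.requests": queue.Queue(),
    "orders.replies": queue.Queue(),
}

def pricing_worker():
    """Receiving service: reads requests and sends each response to the
    queue named in the request's reply_to field."""
    while True:
        message = queues["pricing.requests"].get()
        if message is None:  # shutdown signal used only by this demo
            break
        response = {
            "correlation_id": message["correlation_id"],
            "price": message["quantity"] * 2.5,
        }
        queues[message["reply_to"]].put(response)

worker = threading.Thread(target=pricing_worker, daemon=True)
worker.start()

# The caller enqueues its request and continues without blocking.
correlation_id = str(uuid.uuid4())
queues["pricing.requests"].put(
    {"correlation_id": correlation_id, "quantity": 4, "reply_to": "orders.replies"}
)

# ... the caller is free to do other work here ...

# Later, a consumer of the reply queue picks up the response.
response = queues["orders.replies"].get(timeout=5)
print(response["price"])  # 10.0

queues["pricing.requests"].put(None)  # stop the demo worker
worker.join()
```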

When to Use Async Request Response?

The key benefit of using message queues is that they buffer requests, so the calling service can send multiple requests without waiting for responses.

If the receiving service cannot process requests fast enough, the message queue can store the incoming requests until they can be handled.

This buffering capability helps to decouple the services and prevents the calling service from being blocked while waiting for a response.

Drawbacks

While the asynchronous request-response pattern offers several advantages, it also introduces a new challenge: correlating requests with their corresponding responses.

As you might notice, there is no temporal coupling between the services in this approach. The service instance that sends the request may not be the same instance that receives the response.

To address this challenge, you need a way to keep track of the requests and match them with the appropriate responses. One solution is to use a database that is accessible to all instances of the service. Here’s how it works:

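- When a request is sent, the calling instance generates a unique identifier (commonly called a correlation ID), includes it in the request message, and stores it in the database.

- When the response is received, it carries the same identifier, so whichever instance receives it can look up the original request in the database and correlate the two.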

It's important to note that a significant amount of time may pass between sending the request and receiving the response. Therefore, the correlation mechanism should be designed to handle scenarios where responses arrive much later than expected.
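A minimal sketch of the correlation mechanism follows. A plain dictionary stands in for the shared database (an assumption; in practice it would be an external store reachable from every instance), and the `CorrelationStore` class, its time-to-live (TTL), and the field names are hypothetical. It shows one way to handle late responses: entries older than the TTL are treated as expired.

```python
import time
import uuid

class CorrelationStore:
    """The dict below is a stand-in for a database shared by all instances."""

    def __init__(self, ttl_seconds=24 * 3600, clock=time.time):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.pending = {}  # correlation_id -> (created_at, request context)

    def record_request(self, context):
        correlation_id = str(uuid.uuid4())
        self.pending[correlation_id] = (self.clock(), context)
        return correlation_id  # sent along with the request message

    def match_response(self, response):
        """Return the original request context, or None if the response is
        unknown (e.g. a duplicate) or arrived after the TTL expired."""
        entry = self.pending.pop(response["correlation_id"], None)
        if entry is None:
            return None
        created_at, context = entry
        if self.clock() - created_at > self.ttl_seconds:
            return None  # too late: the request has already expired
        return context

now = [1000.0]  # fake clock for a deterministic demo
store = CorrelationStore(ttl_seconds=60, clock=lambda: now[0])
cid = store.record_request({"order_id": 42})

# Later, possibly on a different instance that shares the same store:
now[0] += 30
first_match = store.match_response({"correlation_id": cid, "status": "approved"})
duplicate_match = store.match_response({"correlation_id": cid, "status": "approved"})
print(first_match)      # {'order_id': 42}
print(duplicate_match)  # None
```

A real system would also need a periodic sweep to expire requests that never receive a response; that is omitted here.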

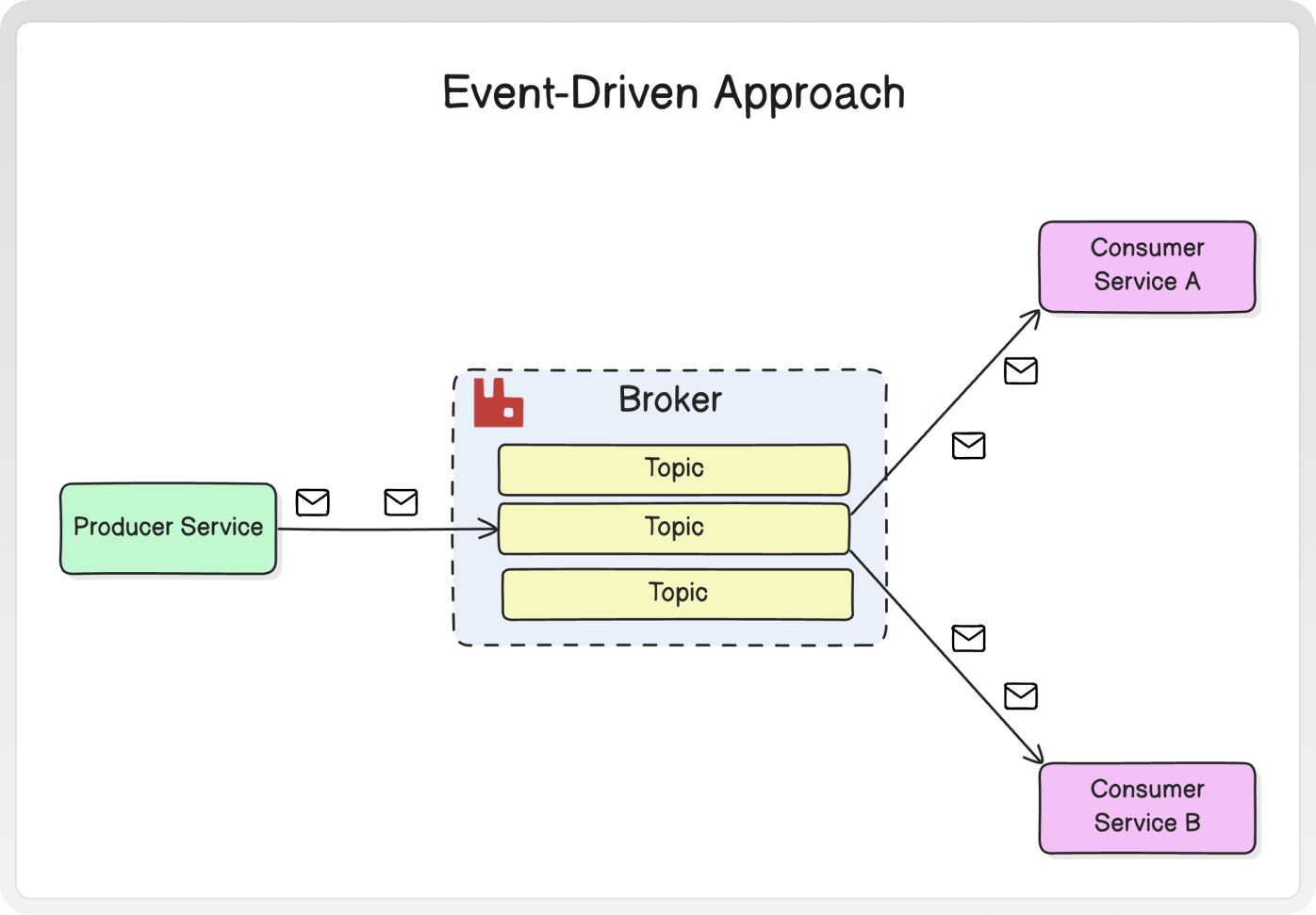

Event-Driven Communication with Pub/Sub

When you're designing services, the event-driven communication pattern offers a unique approach to inter-service interaction.

Unlike direct communication, this pattern revolves around services emitting events that others can consume.

In this pattern, you'll have your services broadcast events without specifying which other services should react to them. An event represents something that has occurred within the context of the emitting service. These events are typically encapsulated in messages, with the event itself serving as the payload.

Message brokers like RabbitMQ can handle the propagation of events. They provide APIs for your producer services to push events and manage subscriptions for the consumer services.
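To illustrate the decoupling, the sketch below uses a toy in-memory broker in place of RabbitMQ. `InMemoryBroker` and the `order.placed` topic are hypothetical, and unlike a real broker it delivers events synchronously and stores nothing durably. What it preserves is that the publisher never names its consumers.

```python
from collections import defaultdict

class InMemoryBroker:
    """Toy stand-in for a message broker: producers publish to a topic
    without knowing who, if anyone, is subscribed."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # topic -> list of handlers

    def subscribe(self, topic, handler):
        self.subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Deliver the event to every subscriber of the topic (possibly none).
        for handler in self.subscribers[topic]:
            handler(event)

broker = InMemoryBroker()
emails_sent, points_awarded = [], []

# Two independent consumers react to the same event.
broker.subscribe("order.placed", lambda e: emails_sent.append(e["order_id"]))
broker.subscribe("order.placed", lambda e: points_awarded.append(e["total"] // 10))

# The order service only states what happened.
broker.publish("order.placed", {"order_id": 42, "total": 120})
print(emails_sent, points_awarded)  # [42] [12]
```

Adding a third consumer is just another `subscribe` call; the publishing service does not change.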

When to Use Event-Driven Communication?

This pattern is particularly useful when you want to:

- Build loosely coupled interactions between the services.

- Broadcast information without specifying downstream services or actions.

- Allow for flexible system expansion without modifying existing services.

Challenges

While event-driven communication offers many benefits, you should also be aware of potential challenges:

- Ensuring reliable event delivery and processing.

- Maintaining event ordering and consistency.

- Managing event schema evolution as your system grows.

- Debugging and tracing event-driven interactions, which is often harder than with direct communication.
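For the ordering challenge, one common technique is to have the producer attach a per-entity sequence number so consumers can discard stale or redelivered events. The sketch below assumes that event schema; the `OrderStatusProjection` class and the field names are hypothetical.

```python
class OrderStatusProjection:
    """Consumer that keeps the latest status per order, assuming each event
    carries a per-order sequence number assigned by the producer."""

    def __init__(self):
        self.status = {}    # order_id -> latest status
        self.last_seq = {}  # order_id -> highest sequence number applied

    def apply(self, event):
        order_id, seq = event["order_id"], event["seq"]
        if seq <= self.last_seq.get(order_id, 0):
            return False  # duplicate or out-of-order event: ignore it
        self.status[order_id] = event["status"]
        self.last_seq[order_id] = seq
        return True

projection = OrderStatusProjection()
events = [
    {"order_id": 1, "seq": 1, "status": "placed"},
    {"order_id": 1, "seq": 3, "status": "shipped"},
    {"order_id": 1, "seq": 2, "status": "paid"},     # arrives late
    {"order_id": 1, "seq": 3, "status": "shipped"},  # redelivered duplicate
]
applied = [projection.apply(e) for e in events]
print(applied)               # [True, True, False, False]
print(projection.status[1])  # shipped
```

Discarding the late `paid` event is safe here only because this consumer needs just the latest status; a consumer that must act on every transition would instead buffer out-of-order events until the gap is filled.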

Principles of Effective Service Communication

Having understood the various patterns of service communication, let’s look at some key principles of effective service-to-service communication.

- Loose coupling and high cohesion ensure that services are independent and focused on specific tasks. Communication between such services is done through well-defined APIs or messaging systems, rather than direct calls or shared databases.

- Autonomy allows services to operate and evolve independently. This means that a change in one service should not heavily impact how other services communicate with it.

- Single responsibility keeps the services simple, maintainable, and aligned with specific business capabilities.

Best Practices for Service Communication

When designing and implementing communication between services, there are several best practices you should follow:

- Avoid long synchronous call chains:
  - Minimize the use of long chains of synchronous calls between services.
  - Long call chains can lead to cascading failures and performance issues if any service in the chain experiences problems or becomes unresponsive.
  - Instead, consider breaking down the operations into smaller, independent tasks and leverage asynchronous communication patterns when possible.

- Idempotency:
  - Design your services to be idempotent, meaning that multiple identical requests should have the same effect as a single request.
  - Idempotency helps in handling duplicate or retried requests gracefully, without causing unintended side effects.

- Proper Error Handling:
  - Implement comprehensive error-handling mechanisms in your services.
  - Use appropriate error codes and messages to convey the nature and cause of the error to the consuming services.
  - Consider implementing retry mechanisms with exponential backoff and circuit breakers to handle transient failures and prevent cascading failures.

- Backward Compatibility:
  - Ensure backward compatibility when evolving your service interfaces.
  - Use versioning techniques, such as API versioning or content negotiation, to support multiple versions of the interface simultaneously.
  - Avoid breaking changes that would require all consumers to update their code simultaneously, as coordinating that is difficult in a distributed system.

- Monitoring and Tracing:
  - Implement monitoring and tracing capabilities to gain visibility into the communication between services.
  - Use distributed tracing tools to track requests as they flow through multiple services. This is crucial for identifying performance bottlenecks and diagnosing issues.
  - Collect metrics and logs to monitor the health, performance, and usage patterns of the services.
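The idempotency and retry advice fit together: a client can only retry safely if the server is idempotent. The sketch below assumes a hypothetical `PaymentService` that stores results by an idempotency key and simulates responses lost after the work was done, plus a hypothetical `call_with_retries` helper that uses exponential backoff with full jitter. All names and parameters are illustrative.

```python
import random
import time
import uuid

class PaymentService:
    """Idempotent server: results are remembered by idempotency key, so a
    retried request does not charge the customer twice."""

    def __init__(self, responses_to_drop=0):
        self.results = {}  # idempotency_key -> result
        self.charges = 0
        self.responses_to_drop = responses_to_drop

    def charge(self, idempotency_key, amount):
        if idempotency_key not in self.results:
            self.charges += 1  # the side effect happens at most once per key
            self.results[idempotency_key] = {"status": "charged", "amount": amount}
        if self.responses_to_drop > 0:
            self.responses_to_drop -= 1
            # Simulates a response lost on the network after the work was done.
            raise TimeoutError("response lost")
        return self.results[idempotency_key]

def call_with_retries(func, max_attempts=5, base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Retry transient failures with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # The delay cap doubles each attempt (0.1, 0.2, 0.4, ...) up to
            # max_delay; a random wait below it spreads out retries.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))

service = PaymentService(responses_to_drop=2)
key = str(uuid.uuid4())  # generated once, reused on every retry
result = call_with_retries(lambda: service.charge(key, 25), sleep=lambda s: None)
print(result)           # {'status': 'charged', 'amount': 25}
print(service.charges)  # 1, despite three attempts
```

The key is generated once per logical operation and reused on every retry; generating a fresh key per attempt would defeat the deduplication.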

Conclusion

In this article, we’ve explored the key communication patterns for services, including synchronous request-response, asynchronous communication through common data, asynchronous request-response, and event-driven communication.

It's crucial to understand that there is no one-size-fits-all solution when it comes to service communication. The choice of pattern should be based on your specific requirements.

Before deciding on a communication pattern, it's good to evaluate factors like data volume, latency requirements, fault tolerance, and the overall architecture of your system. Consider the trade-offs and select the pattern that aligns best with your goals and constraints.

The key is to strike the right balance.
